The 10th CSIG International Online Seminar on Image and Graphics Technology Will Be Held on September 27

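In the metaverse era, where everything can be virtualized, virtual scenes keep growing in scale, 3D objects are becoming more photorealistic, and display devices demand ever higher frame rates. Against this backdrop, how can graphics rendering engines support high-performance, highly realistic rendering of large-scale complex scenes? How can next-generation rendering techniques, represented by neural rendering and differentiable rendering, improve existing rendering pipelines to meet the needs of an ever wider range of graphics applications? To address this theme, the China Society of Image and Graphics (CSIG) will hold the 10th CSIG International Online Seminar on Image and Graphics Technology (High-quality Rendering) on Wednesday, September 27, 2023, 09:00-11:00. The seminar has invited three internationally renowned scholars from China and the United States to present the latest research in graphics rendering, covering realistic rendering, neural rendering, and differentiable rendering, and to discuss the field's current challenges and future trends. We look forward to active participation from colleagues in academia and industry!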

Host: China Society of Image and Graphics (CSIG)

Organizer: CSIG International Cooperation and Exchange Working Committee

Time: Wednesday, September 27, 2023, 09:00-11:00

Tencent Meeting ID: 184-394-442

Live stream: https://meeting.tencent.com/dm/mt0JNsTAwyG5 or long-press the QR code below to join

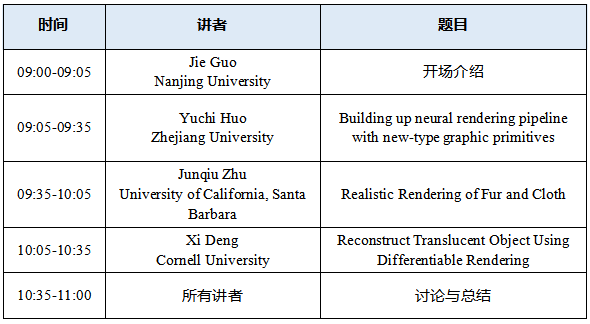

Agenda

About the Speakers

Yuchi Huo

Zhejiang University

Yuchi Huo is an assistant professor (hundred-program researcher) at the State Key Lab of CAD&CG, Zhejiang University, embracing an interdisciplinary interest in ‘light’ across computer graphics, computer vision, machine learning, and computational optics. His recent achievements have been presented in Nature Communications, ACM TOG, SIGGRAPH, CVPR, NIPS, etc., covering physical rendering, real-time rendering, neural rendering, 3D reconstruction, AIGC, optical neural network, XR, multimodal vision, 3D vision, and image/pixel retrieval.

Talk title: Building up neural rendering pipeline with new-type graphic primitives

Abstract: How to represent and render 3D worlds is the essential challenge of computer graphics. In the last decades, the real-time rendering pipeline based on 3D mesh primitives has fundamentally defined the context of computer graphics. However, recent innovations in new-type graphic primitives such as NeRF and implicit SDF offer unprecedented compactness, realism, and flexibility for creating digital content, revealing new possibilities for producing next-generation graphics standards. In this talk, I will introduce our recent research in this direction, involving 3D reconstruction, relighting, editing, generation (AIGC), and most importantly, how to build up a new rendering pipeline upon these new-type graphic primitives.

Junqiu Zhu

University of California, Santa Barbara

Junqiu Zhu is a postdoc at the UCSB Center for Interactive and Visual Computing (CIVC), advised by Prof. Lingqi Yan. Her field of research is physically-based computer graphics, with a focus on photorealistic rendering and appearance modeling for complex materials. She received her Ph.D. degree in Software Engineering from Shandong University, supervised by Prof. Xiangxu Meng, and co-supervised by Prof. Lu Wang and Prof. Yanning Xu.

Talk title: Realistic Rendering of Fur and Cloth

Abstract: The rendering of fur and cloth has been an active research topic in computer graphics for decades. The challenge boils down to representing the complex geometry and optics. Both animal fur and cloth are composed of a vast number of fibers, which presents challenges in modeling and storage. During rendering, the optical properties of a single fiber are complex enough on their own, and accounting for multiple bounces makes the rendering results even harder to converge.

We aim to ensure the realism of fur and cloth rendering while achieving efficient rendering at the same time. In this talk, I will introduce our three related works. The first work develops a method that dynamically adjusts the detail level of animal fur rendering based on viewing distances. The second work presents a cloth rendering model that adeptly portrays ply-level geometry and optics, while only necessitating yarn-level geometry modeling. The third work presents a simplified cloth model that bypasses fiber geometry and only uses surface geometry, yet successfully maintains a realistic cloth appearance.

The works introduced in this talk have been successfully applied in the industry. Our yarn-based cloth model has been integrated into Weta's proprietary Manuka rendering engine, and the movie "Avatar: The Way of Water" utilized our model. The related paper also won the "EGSR 2023 Best Paper Award" and the "EGSR 2023 Best Visual Award". Our surface-based model is also in the process of being integrated into Meta's product workflows.

Xi Deng

Cornell University

Xi Deng is a Ph.D. candidate in the Graphics and Vision Group at Cornell University, advised by Dr. Steve Marschner. Her main research interests are physically-based light transport simulation, inverse rendering, and material model reconstruction.

Talk title: Reconstruct Translucent Object Using Differentiable Rendering

Abstract: Inverse rendering is a powerful approach to modeling objects from photographs, and we extend previous techniques to handle translucent materials that exhibit subsurface scattering. Representing translucency using a heterogeneous bidirectional scattering-surface reflectance distribution function (BSSRDF), we extend the framework of path-space differentiable rendering to accommodate both surface and subsurface reflection. We use this differentiable rendering method in an end-to-end approach that jointly recovers heterogeneous translucent materials (represented by a BSSRDF) and detailed geometry of an object (represented by a mesh) from a sparse set of measured 2D images in a coarse-to-fine framework incorporating Laplacian preconditioning for the geometry. To efficiently optimize our models in the presence of the Monte Carlo noise introduced by the BSSRDF integral, we introduce a dual-buffer method for evaluating the L2 image loss. This efficiently avoids potential bias in gradient estimation due to the correlation of estimates for image pixels and their derivatives and enables correct convergence of the optimizer even when using low sample counts in the renderer.
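The bias that the abstract attributes to correlated pixel and derivative estimates can be illustrated with a toy one-pixel example. The sketch below is not the speaker's implementation: it assumes a hypothetical estimator whose pixel value is `theta * w` with a random weight `w` of mean 1, and the names `render_weight`, `single_buffer_grad`, `dual_buffer_grad`, `mean_grad`, and `SIGMA` are invented for illustration. It only shows why drawing the two factors of the L2 gradient from independent renders removes the bias.

```python
import random

SIGMA = 0.5  # assumed noise level of the toy estimator


def render_weight(rng):
    """Hypothetical Monte Carlo sample weight with expectation 1."""
    return 1.0 + rng.gauss(0.0, SIGMA)


def single_buffer_grad(theta, target, rng):
    # One render supplies both the pixel value and its derivative,
    # so the two factors are correlated and their product is biased.
    w = render_weight(rng)
    pixel, d_pixel = theta * w, w
    return 2.0 * (pixel - target) * d_pixel


def dual_buffer_grad(theta, target, rng):
    # Two independent renders: one for the pixel value, one for the
    # derivative. The factors are uncorrelated, so the product is unbiased.
    pixel = theta * render_weight(rng)
    d_pixel = render_weight(rng)
    return 2.0 * (pixel - target) * d_pixel


def mean_grad(grad_fn, theta, target, n=200_000, seed=0):
    # Average many noisy gradient estimates to expose their expectation.
    rng = random.Random(seed)
    return sum(grad_fn(theta, target, rng) for _ in range(n)) / n
```

With `theta` equal to `target`, the true gradient of the L2 loss is 0. In this toy model, `mean_grad(single_buffer_grad, 1.0, 1.0)` settles near `2 * SIGMA**2`, while `mean_grad(dual_buffer_grad, 1.0, 1.0)` settles near 0, so an optimizer driven by the single-buffer estimate would drift away from the correct answer.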

About the Host

Jie Guo

Nanjing University

Dr. Jie Guo is an associate researcher in the Department of Computer Science and Technology at Nanjing University. He received his PhD from Nanjing University in 2013. His current research interests are mainly in computer graphics and virtual reality. He has over 70 publications in internationally leading conferences (SIGGRAPH, CVPR, ICCV, ECCV, IEEE VR, etc.) and journals (ACM ToG, IEEE TVCG, IEEE TIP, etc.). He has developed several applications for illumination prediction, material prediction, and real-time rendering, which have been widely used in industry and have delivered good economic and social benefits. He is the recipient of the JSCS Youth Science and Technology Award, the JSIE Excellent Young Engineer Award, the Huawei Spark Award, the 4D ShoeTech Young Scholar Award, and the Lu Zengyong CAD&CG High-Tech Award.
