CVPR International Paper Online Preview Session No. 1 (Deep Visual Modeling and Analysis) to Be Held on May 10

Organizer: China Society of Image and Graphics (CSIG)

Co-organizers: CSIG International Cooperation and Exchange Working Committee; CSIG Youth Working Committee

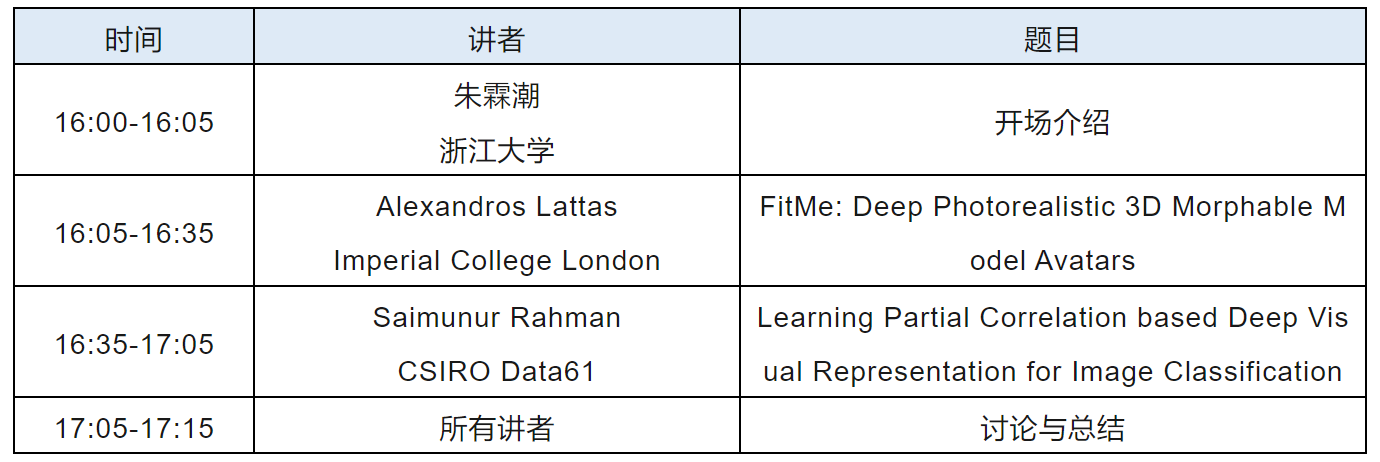

Time: Wednesday, May 10, 2023, 16:00-17:00 (Beijing Time)

Tencent Meeting ID: 477-014-796

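Livestream: The event will be streamed live on the official CSIG WeChat Channels account. Follow the account and tap "Reserve" to watch when the stream begins.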

Schedule

Host: Linchao Zhu (Zhejiang University)

Speakers

Alexandros Lattas

Imperial College London

Alexandros Lattas is a PhD student at Imperial College London, where he researches how AI can generate realistic virtual humans under the supervision of Prof. Stefanos Zafeiriou and Prof. Abhijeet Ghosh. He is currently also a Researcher in Computer Vision at Huawei UK R&D. Alexandros studied Computer Science and Innovation Management at Imperial College London, University College London and the Athens University of Economics and Business. His research interests include photorealistic 3D human modeling with deep learning, and 3D computer vision and graphics.

Talk title: FitMe: Deep Photorealistic 3D Morphable Model Avatars

Abstract: In this paper, we introduce FitMe, a facial reflectance model and a differentiable rendering optimization pipeline that can be used to acquire high-fidelity renderable human avatars from single or multiple images. The model consists of a multi-modal style-based generator, which captures facial appearance in terms of diffuse and specular reflectance, and a PCA-based shape model. We employ a fast differentiable rendering process that can be used in an optimization pipeline while still achieving photorealistic facial shading. Our optimization process accurately captures both facial reflectance and shape in high detail by exploiting the expressivity of the style-based latent representation and of our shape model. FitMe achieves state-of-the-art reflectance acquisition and identity preservation on single “in-the-wild” facial images, and it produces impressive scan-like results when given multiple unconstrained facial images of the same identity. In contrast with recent implicit avatar reconstructions, FitMe requires only one minute and produces relightable mesh- and texture-based avatars that can be used by end-user applications.
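The abstract combines a PCA-based shape model with an optimization loop. The Python sketch below illustrates only that "linear shape model plus fitting" idea on toy data and is not FitMe itself. It assumes a hypothetical mean shape and orthonormal basis, and it swaps FitMe's differentiable-rendering loss against images for a plain squared error against known 3D vertex positions. The names `reconstruct` and `fit_coefficients`, and all parameter values, are illustrative assumptions.

```python
from typing import List

Vector = List[float]


def reconstruct(mean: Vector, basis: List[Vector], coeffs: Vector) -> Vector:
    """Linear (PCA-style) shape model: mean + sum_k coeffs[k] * basis[k].

    Shapes are flattened vertex lists [x0, y0, z0, x1, y1, z1, ...].
    """
    shape = list(mean)
    for c, component in zip(coeffs, basis):
        for i, value in enumerate(component):
            shape[i] += c * value
    return shape


def fit_coefficients(mean: Vector, basis: List[Vector], target: Vector,
                     reg: float = 0.0, lr: float = 0.1, steps: int = 500) -> Vector:
    """Gradient descent on ||reconstruct(c) - target||^2 + reg * ||c||^2.

    Toy stand-in for an image-based loss: here the target vertices are known directly.
    """
    coeffs = [0.0] * len(basis)
    for _ in range(steps):
        residual = [s - t for s, t in zip(reconstruct(mean, basis, coeffs), target)]
        # d/dc_k = 2 * <residual, basis_k> + 2 * reg * c_k
        grads = [2.0 * sum(r * b for r, b in zip(residual, component)) + 2.0 * reg * c
                 for c, component in zip(coeffs, basis)]
        coeffs = [c - lr * g for c, g in zip(coeffs, grads)]
    return coeffs


if __name__ == "__main__":
    # Hypothetical two-vertex "mesh" with two orthonormal basis vectors.
    mean = [0.0, 0.0, 0.0, 1.0, 0.0, 0.0]
    basis = [[1.0, 0.0, 0.0, 0.0, 0.0, 0.0],
             [0.0, 0.0, 0.0, 0.0, 1.0, 0.0]]
    target = reconstruct(mean, basis, [0.5, -0.25])
    print(fit_coefficients(mean, basis, target))           # close to [0.5, -0.25]
    print(fit_coefficients(mean, basis, target, reg=1.0))  # shrunk toward zero
```

The `reg` term acts like a zero-mean Gaussian prior on the coefficients. With an orthonormal basis and `reg=1.0`, the minimizer is exactly half the unregularized coefficients.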

Saimunur Rahman

Saimunur Rahman is a postdoctoral research fellow at CSIRO Data61. He obtained his PhD from the University of Wollongong and CSIRO Data61. He received his M.Sc. (by Research) in Computer Vision and his B.Sc. in Computer Science & Engineering. His current research interests include computer vision, robotics and machine learning. He regularly reviews papers for top artificial intelligence conferences such as CVPR, ECCV and ACM MM, and serves on the program committees of various international conferences.

Talk title: Learning Partial Correlation based Deep Visual Representation for Image Classification

Abstract: Visual representation based on the covariance matrix has demonstrated its efficacy for image classification by characterising the pairwise correlation of different channels in convolutional feature maps. However, pairwise correlation becomes misleading once another channel correlates with both channels of interest, resulting in the “confounding” effect. In this case, “partial correlation”, which removes the confounding effect, should be estimated instead. Nevertheless, reliably estimating partial correlation requires solving a symmetric positive definite matrix optimisation, known as sparse inverse covariance estimation (SICE). How to incorporate this process into a CNN remains an open issue. In this work, we formulate SICE as a novel structured layer of a CNN. To ensure end-to-end trainability, we develop an iterative method to solve the above matrix optimisation during the forward and backward propagation steps. Our work obtains a partial correlation based deep visual representation and mitigates the small sample problem often encountered by covariance matrix estimation in CNNs. Computationally, our model can be trained effectively on GPUs and works well with the large number of channels in advanced CNNs. Experiments show the efficacy of our deep visual representation and its superior classification performance compared with covariance matrix based counterparts.
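To make the confounding effect concrete, the sketch below computes pairwise correlations from a covariance matrix and partial correlations from its inverse (the precision matrix Θ), using the standard identity ρ_ij·rest = -Θ_ij / sqrt(Θ_ii · Θ_jj). It uses plain matrix inversion, not the talk's SICE layer. SICE is commonly posed as an ℓ1-penalised log-determinant problem whose solution is a sparse precision matrix that stays well defined when samples are scarce; plain inversion offers no such guarantee. The three-channel covariance is a hypothetical example in which channels x and y are each a shared channel z plus independent unit-variance noise, and all function names are illustrative.

```python
import math
from typing import List

Matrix = List[List[float]]


def invert(matrix: Matrix) -> Matrix:
    """Gauss-Jordan elimination with partial pivoting (fine for small, well-conditioned matrices)."""
    n = len(matrix)
    aug = [[float(v) for v in row] + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular or nearly singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def covariance_to_correlation(cov: Matrix) -> Matrix:
    """Pairwise (Pearson) correlation: cov_ij / sqrt(cov_ii * cov_jj)."""
    n = len(cov)
    return [[cov[i][j] / math.sqrt(cov[i][i] * cov[j][j]) for j in range(n)]
            for i in range(n)]


def partial_correlation(cov: Matrix) -> Matrix:
    """Partial correlation of each pair given all other channels, via the precision matrix."""
    theta = invert(cov)
    n = len(theta)
    return [[1.0 if i == j else -theta[i][j] / math.sqrt(theta[i][i] * theta[j][j])
             for j in range(n)] for i in range(n)]


if __name__ == "__main__":
    # Channel order (x, y, z): x = z + e1, y = z + e2, with z, e1, e2 independent, unit variance.
    cov = [[2.0, 1.0, 1.0],
           [1.0, 2.0, 1.0],
           [1.0, 1.0, 1.0]]
    print("pairwise corr(x, y):", covariance_to_correlation(cov)[0][1])
    print("partial corr(x, y | z):", partial_correlation(cov)[0][1])
```

Here the pairwise correlation of x and y is 0.5, yet their partial correlation given z is zero (up to floating-point error), because x and y are related only through the confounding channel z.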

About the Host

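Linchao Zhu is a ZJU100 Young Professor and doctoral supervisor at Zhejiang University, and has been selected for a national young talent program. He received his bachelor's degree from Zhejiang University and his PhD from the University of Technology Sydney, where he later served as a lecturer. His research focuses on cross-media intelligence and its applications, temporal modeling, and interdisciplinary AI research. He has won more than ten international academic competitions, including TRECVID LOC 2016 (organized by the U.S. National Institute of Standards and Technology), THUMOS 2015 action recognition, EPIC-KITCHENS 2019/2020 action recognition, and the MABe 2022 multi-agent behavior modeling challenge. He received a Google Research Scholar Award (2021), serves as an Associate Editor of Visual Informatics and a Guest Editor of Neurocomputing, and was an Area Chair of IEEE MLSP (2021). As program chair, he has organized CVPR workshops (2021, 2022, 2023) and an ICME special workshop (2022).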
