Third CSIG International Online Workshop on Image and Graphics Technology to Be Held on March 30

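As a core component of the new generation of artificial intelligence, Embodied AI emphasizes that human cognition and intellectual activity are not computations carried out by the brain in isolation, but arise from the interaction of brain, body, and environment. An agent needs to interact with its environment and learn from the resulting feedback in order to become more intelligent. The development of Embodied AI therefore depends on digital scene modeling and interaction simulation built on image and graphics technology.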

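Around this theme, the China Society of Image and Graphics (CSIG) will hold the third CSIG International Online Workshop on Image and Graphics Technology (Embodied AI special session) on Wednesday, March 30, 2022, 09:00-12:00. The workshop has invited four internationally renowned scholars from the United States, Canada, and China to present the latest research results in Embodied AI and to discuss the field's current challenges and future trends. Colleagues from academia and industry are warmly invited to take part.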

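Host: China Society of Image and Graphics (CSIG)

Organizers: CSIG International Cooperation and Exchange Committee; CSIG Committee of Women in Science and Technology

Time: Wednesday, March 30, 2022, 09:00-12:00

Tencent Meeting ID: 182-324-517

Live stream: https://meeting.tencent.com/l/PTatQESfNEHf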

Or long-press the QR code below to join:

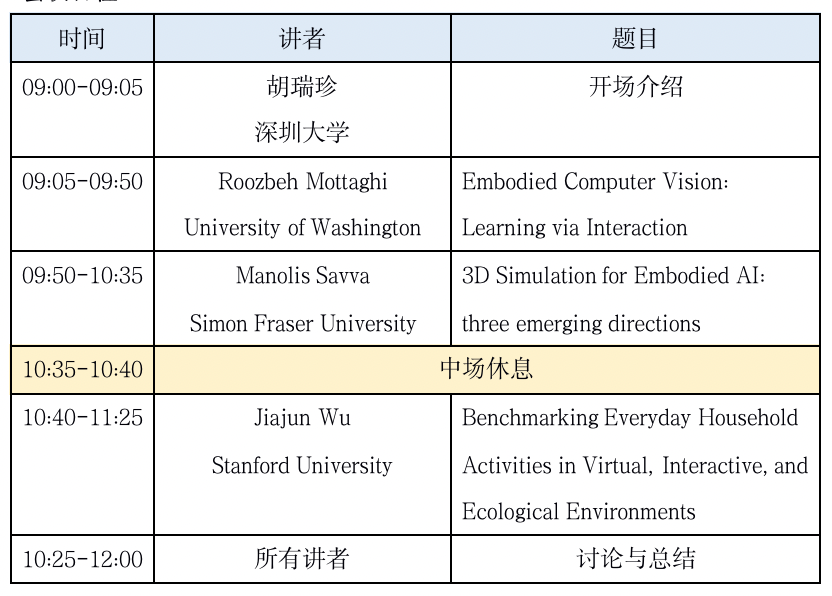

Agenda

Speaker Bios

Roozbeh Mottaghi

University of Washington

Roozbeh Mottaghi is the Research Manager of the Perceptual Reasoning and Interaction Research (PRIOR) group at AI2 and an Affiliate Associate Professor in the Paul G. Allen School of Computer Science and Engineering at the University of Washington. Prior to joining AI2, he was a post-doctoral researcher in the Computer Science Department at Stanford University. He obtained his PhD in Computer Science in 2013 from the University of California, Los Angeles. His research is mainly focused on Computer Vision and Machine Learning. More specifically, he is interested in Embodied AI, physical reasoning via perception, and learning via interaction.

Talk title: Embodied Computer Vision: Learning via Interaction

Abstract: The re-emergence of deep neural networks has led to significant progress in computer vision over the past decade. We now have robust methods and architectures for image classification, object detection, and other core computer vision tasks. While these tasks form the foundation of computer vision, they are not the end goal. It is now time to take a step further and develop the next generation of tasks: tasks that require reasoning beyond pattern recognition. A popular class of these tasks is Embodied AI tasks that require an agent to understand the dynamics of the world around it, interact with the surrounding environment, and learn from its interactions.

I will talk about the issues associated with our current view of data, models, and tasks and how we should adapt to the new paradigm in computer vision. I will talk about the recent advances in AI2-THOR, our platform for Embodied AI, which enables a plethora of Embodied tasks such as learning physics, interactive instruction following, and commonsense reasoning. I will next focus on a recent work showing that minimal interaction with the environment improves a state-of-the-art object detector by about 12 points in AP (it takes the object detection community about two years to obtain such an improvement). This is one example showing that interaction with the environment is the key to improving computer vision models further.

Manolis Savva

Simon Fraser University

Manolis Savva is an Assistant Professor of Computing Science at Simon Fraser University, and a Canada Research Chair in Computer Graphics. His research focuses on analysis, organization and generation of 3D content, forming a path to holistic 3D scene understanding revolving around people, and enabling applications in computer graphics, computer vision, and robotics. Prior to his current position he was a visiting researcher at Facebook AI Research, and a postdoctoral researcher at Princeton University. He received his Ph.D. from Stanford University under the supervision of Pat Hanrahan, and his B.A. in Physics and Computer Science from Cornell University. The impact of his work has been recognized by a number of awards including the SGP 2020 Dataset Award for ScanNet, an ICCV 2019 Best Paper Award Nomination for Habitat, and the SGP 2018 Dataset Award for ShapeNet.

Talk title: 3D Simulation for Embodied AI: three emerging directions

Abstract: 3D simulators are increasingly being used to develop and evaluate "embodied AI" (agents perceiving and acting in realistic environments). Much of the prior work in this space has treated simulation platforms as "black boxes" within which learning algorithms are to be deployed. However, the design choices and resulting system characteristics of the simulation platforms themselves can greatly impact both the feasibility and the outcomes of experiments involving simulation. In this talk, I will describe a number of recent projects that outline three emerging directions for 3D simulation platforms.

Jiajun Wu

Stanford University

Jiajun Wu is an Assistant Professor of Computer Science at Stanford University, working on computer vision, machine learning, and computational cognitive science. Before joining Stanford, he was a Visiting Faculty Researcher at Google Research. He received his PhD in Electrical Engineering and Computer Science from the Massachusetts Institute of Technology. Wu's research has been recognized through the ACM Doctoral Dissertation Award Honorable Mention, the AAAI/ACM SIGAI Doctoral Dissertation Award, the MIT George M. Sprowls PhD Thesis Award in Artificial Intelligence and Decision-Making, the 2020 Samsung AI Researcher of the Year, the IROS Best Paper Award on Cognitive Robotics, and faculty research awards and graduate fellowships from Samsung, Amazon, Facebook, Nvidia, and Adobe.

Talk title: Benchmarking Everyday Household Activities in Virtual, Interactive, and Ecological Environments

Abstract: In this talk, I'll present BEHAVIOR, a benchmark for embodied AI with 100 activities in simulation, spanning a range of everyday household chores such as cleaning, maintenance, and food preparation. These activities are designed to be realistic, diverse, and complex, aiming to reproduce the challenges that agents must face in the real world. Building such a benchmark poses three fundamental difficulties for each activity: definition (it can differ by time, place, or person), instantiation in a simulator, and evaluation. BEHAVIOR addresses these with (1) an object-centric, predicate logic-based description language for expressing an activity's initial and goal conditions, (2) simulator-agnostic features required by an underlying environment to support BEHAVIOR, and (3) a set of metrics to measure task progress and efficiency. I will also demonstrate a realization of the BEHAVIOR benchmark in the iGibson simulator, as well as various benchmarking results.

Moderator Bio

Ruizhen Hu (胡瑞珍)

Shenzhen University

Ruizhen Hu is an Associate Professor at Shenzhen University, China. She received her Ph.D. from the Department of Mathematics, Zhejiang University. Before that, she spent two years visiting Simon Fraser University, Canada. Her research interests are in computer graphics, with a recent focus on applying machine learning to advance the understanding and modeling of visual data. She received the Asia Graphics Young Researcher Award in 2019. She has served as a program co-chair for SMI 2020, and is an editorial board member of The Visual Computer and IEEE CG&A.
